Ahh another year, another update to EF Core. Lucky us! Remember when Microsoft first released Entity Framework in 2008 and many worried that it would be yet another short-lived data access platform from Microsoft? (Note that ADO.NET is still widely used, maintained, and supported!) Well, it's been 13 years, including EF's transition to EF Core and it just keeps getting better and better.

You may have heard me refer to EF Core 3 as the “breaking changes edition.” In reality, those breaking changes set EF Core up for the future, as I relayed in "Entity Framework Core 3.0: A Foundation for the Future", covering the highlights of those changes. The next release, EF Core 5 (following the numbering system of .NET 5), built on that foundation. I also wrote about this version in "EF Core 5: Building on the Foundation".

And now here comes EF Core 6. My perspective on it is that the team has been working on their (and your) bucket list, digging into improvements to EF Core that they've wanted to get to for quite a long time but that kept losing out to more pressing features and fixes. But they didn't only work on their own goals. In advance of planning, the team put out a survey to gauge usage of existing versions of EF and EF Core and what they should focus on going forward. They presented the results from about 4000 developers in this January 2021 Community Standup: https://www.youtube.com/watch?v=IiAS61uVDqE. The survey was available before EF Core 5 was released and into only its first few months, so it was not surprising that EF Core 5 trailed behind EF Core 3 and EF6. There were a substantial number of devs still using EF6. This makes a lot of sense to me for the many legacy apps out there: If it ain't broke, don't fix it.

The team has again been incredibly transparent about their goals and progress. In the docs (https://docs.microsoft.com/en-us/ef/core/what-is-new/ef-core-6.0/plan), they shared their high-level plan, updates of what's new for each preview, and their (very short) list of breaking changes. Late in the development cycle, it was sad to see the team come to grips with some plans they had to give up on for this version of EF Core 6 and change their GitHub milestones to “punted for EF Core 6.” But as developers, we all understand how this goes. The GitHub repo tracks every issue with detail and tags which preview/version the issue is tied to. There are also detailed bi-weekly status updates at https://github.com/dotnet/efcore/issues/23884. And triggered by the lockdown, the team got online every few weeks with the EF Core Community Standups, showing us what they're working on and inviting guests to share their additions to the EF Core ecosystem. Here's a shortcut to the full playlist of their standups where you can also keep up with upcoming shows (https://bit.ly/EFCoreStandups).

There were monthly releases of preview builds available on NuGet (https://www.nuget.org/packages/Microsoft.EntityFrameworkCore), and the GitHub repository's readme (https://github.com/dotnet/efcore#readme) led to detailed instructions on how to work with daily builds if you wanted to do that.

Although there have been a lot of small changes, this article will cover some of the most notable and most impactful changes that you'll find in EF Core 6.

Huge Gains in Query Performance

Top of the EF Core wish list for so many developers, and for the EF team, was query performance. Performance has long been a point of criticism for EF and EF Core, even though it has dramatically improved over the years. Jon P Smith, EF Core book author and a real expert, explains that the work he does for performance tuning is “not because EF Core is bad at performance, but because real-world application has a lot of data and lots of relationships (often hierarchical), and it takes some extra work to get the performance the client needs.” Even with lots of great strategies for perf tuning EF, the team set out to really raise the bar on performance with EF Core 6, with laser-like focus and with Shay Rojansky leading the effort.

An early step was to set a goal to improve EF Core's standing in a widely known industry benchmark for measuring performance: the TechEmpower Web Framework Benchmarks (https://www.techempower.com/benchmarks/). The benchmark comes with tweakable source code and is used by hundreds of frameworks, including ASP.NET and EF Core. Among the comparisons are some that focus on data access and one in particular, called Fortunes, which “exercises the ORM, database connectivity, dynamic-size collections, sorting, server-side templates, XSS countermeasures, and character encoding.” I was more than surprised to discover that there are almost 450 ORMs on the list - although only 30 are .NET ORMs! The TechEmpower benchmark provides a very specific set of standards (and source code) for how to set up and run the tests, including standard hardware requirements. The version that the ASP.NET team uses is on GitHub at https://github.com/aspnet/Benchmarks.

For EF Core 6, most of the performance improvements were aimed at non-tracking queries, although tracked queries certainly benefited. Rojansky tells me that they hope to deepen their focus on change-tracked queries in EF Core 7.
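To make the tracked versus non-tracking distinction concrete, here is a minimal sketch of the two query styles side by side. The Person and PeopleContext types and the SQLite file name are stand-ins invented for illustration; they are not taken from the benchmark code.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Hypothetical entity and context, defined only so the sketch is self-contained.
public class Person
{
    public int Id { get; set; }
    public string LastName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlite("Data Source=MyDatabase.db");
}

public static class QueryExamples
{
    // Tracked (the default): every returned entity is registered with the change tracker.
    public static List<Person> GetTracked(PeopleContext context)
        => context.People.OrderBy(p => p.LastName).ToList();

    // Non-tracking: entities are materialized without change-tracking bookkeeping,
    // which suits read-only scenarios such as rendering a list.
    public static List<Person> GetReadOnly(PeopleContext context)
        => context.People.AsNoTracking().OrderBy(p => p.LastName).ToList();
}
```

Entities returned by GetReadOnly aren't watched by the context, so edits made to them won't be picked up by SaveChanges.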

EF Core is often compared in performance to Dapper, a widely used micro-ORM for .NET built by the folks over at StackOverflow. At the start, there was a 55% gap between Dapper's rows returned per second and that of EF Core 5. The improvements fell into a series of categories. Before even looking at the EF Core APIs, it turned out that the benchmark itself was begging for some tweaks to make comparisons more equitable. Tuning EF's DbContext pooling (23% improvement), PostgreSQL connection pooling (2.8% improvement), and requiring results rather than wasting time with null checks (1.7% improvement) already had a significant impact. Another interesting benefit was switching the benchmark app to use .NET 6 instead of .NET 5 (9.8% improvement). Finally, it was time to dig into fine tuning EF Core itself. Changes to how logging works and how related data is tracked, letting the app opt out of concurrency detection, and a few other tweaks added up to another significant gain. (Let's not forget shout outs to Nino Floris and Nikita Kazmin for contributions to this work.)
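DbContext pooling was the largest single gain in that list. As a rough sketch of what pooling looks like outside the benchmark, the code below uses PooledDbContextFactory, which EF Core 6 lets you construct directly. The Person and PeopleContext types and the connection string are hypothetical, and an ASP.NET Core app would more typically register the pool with AddDbContextPool.

```csharp
using Microsoft.EntityFrameworkCore;
using Microsoft.EntityFrameworkCore.Infrastructure;

// Hypothetical entity, defined only so the sketch is self-contained.
public class Person
{
    public int Id { get; set; }
    public string LastName { get; set; } = string.Empty;
}

// A pooled context needs a public constructor that accepts DbContextOptions.
public class PeopleContext : DbContext
{
    public PeopleContext(DbContextOptions<PeopleContext> options) : base(options) { }

    public DbSet<Person> People => Set<Person>();
}

public static class PoolingExample
{
    public static PooledDbContextFactory<PeopleContext> CreateFactory()
    {
        var options = new DbContextOptionsBuilder<PeopleContext>()
            .UseSqlite("Data Source=MyDatabase.db") // placeholder connection string
            .Options;

        // Contexts handed out by this factory are reset and reused instead of rebuilt.
        return new PooledDbContextFactory<PeopleContext>(options);
    }
}
```

You would then write `using var context = factory.CreateDbContext();` wherever a context is needed. Disposing the context returns it to the pool rather than discarding it.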

In the end, the gap between EF Core and Dapper was reduced from 55% to 4.5% and the overall speed of EF Core's queries based on the Fortunes benchmark improved by 70%.

This is truly commendable work and the team is thrilled to finally have had time to focus on this. You can read a detailed blog post by Rojansky at https://devblogs.microsoft.com/dotnet/announcing-entity-framework-core-6-0-preview-4-performance-edition/ and see how all of this was put together in this GitHub issue: https://github.com/dotnet/efcore/issues/23611.

Improved Startup Performance with Compiled Models

Another area for performance improvement that was highlighted by the survey was the need to pre-compile the models described by a DbContext. This goes all the way back to the very first version of Entity Framework; there were some mechanisms for this over the years in EF but not in EF Core. And “startup” is not exactly the correct word. The basic workflow about how EF gets going in an application hasn't changed much over the years. At runtime, EF has to read the DbContext and relevant entities along with any data annotations and fluent configurations to build an in-memory version of the data model. This doesn't happen when you first instantiate that context, but the first time you ask the context to do something. And that only happens once per application instance. How many people have “tested” EF performance by creating an app that instantiates a context, does ONE thing, and then exits? And then writes a rant about the terrible performance of EF? Well, that's because they're incurring the startup cost of that context every single time.
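If you want to see that one-time cost for yourself, a minimal sketch like the following times the first and second use of a context within the same process. The Person and PeopleContext types are hypothetical stand-ins, and reading the Model property is used here simply as a convenient way to force EF Core to build the model.

```csharp
using System;
using System.Diagnostics;
using Microsoft.EntityFrameworkCore;

// Hypothetical entity and context, defined only so the sketch is self-contained.
public class Person
{
    public int Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlite("Data Source=MyDatabase.db");
}

public static class StartupTiming
{
    // Times how long it takes to create a context and obtain its model.
    public static TimeSpan TimeModelAccess()
    {
        var stopwatch = Stopwatch.StartNew();
        using var context = new PeopleContext();
        _ = context.Model; // the first access in a process builds the model; later contexts reuse it
        return stopwatch.Elapsed;
    }

    public static void Main()
    {
        Console.WriteLine($"First use:  {TimeModelAccess().TotalMilliseconds} ms");
        Console.WriteLine($"Second use: {TimeModelAccess().TotalMilliseconds} ms");
    }
}
```

The first measurement includes model building along with EF Core's other one-time initialization, so treat the numbers as a rough indication rather than a benchmark.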

With a small model in an application - or perhaps a variety of small models - that initial startup cost will most likely be undetectable. But you may realize some benefit from compiled models in serverless apps with multiple instances, on devices with minimal resources or even when repeatedly debugging your app while you're working on it.

The EF team ran a variety of performance tests using a rather large and complex model with not only a lot of entities, but a lot of relationships and a lot of non-conventional configurations. In one of the EF Community Standups, Arthur Vickers demoed an app that leverages BenchmarkDotNet (https://benchmarkdotnet.org) to iterate the calls, then gather and report the timings. The startup time for that first use of a DbContext ran a little more than 10 times faster when using the compiled model than when simply allowing EF Core to work out the model at runtime.

I'll show you how easy it is to pre-compile a model and have your app use that. There are two steps: compiling the model and then using the compiled model.

Compiling your model is simply a matter of running a CLI command against your DbContext.

In the CLI, the command is:

dotnet ef dbcontext optimize

The PowerShell version of the command for use in Visual Studio's Package Manager Console is

Optimize-DbContext

By default, this creates a CompiledModels folder and generates the compiled model files in that folder. You can specify your own folder name with the output directory parameter (--output-dir in the CLI, -OutputDir in PowerShell), if you prefer. There are files for each entity describing all its configurations in one place, a file for the model itself, and a file that exposes the logic for building that particular model with its entities. In my case, those files are named AddressEntityType, PersonEntityType, PeopleContextModel, and PeopleContextModelBuilder.

Now, all of these new classes are part of your project. The last puzzle piece is to let the DbContext know to just use a compiled model instance rather than go through the process of reading all of the various sources of info it normally uses to build up its understanding of the model. You'll do this in the context's OnConfiguring method with DbContextOptionsBuilder.UseModel, passing in an instance of the model defined in those final two files.

    optionsBuilder
        .UseModel(PeopleContextModel.Instance)
        .UseSqlite("Data Source=MyDatabase.db");

This essentially short circuits the OnModelCreating method. The parameter is the Instance property of the generated model file. Internally, EF Core calls the Initialize method and triggers the fast runtime creation of the model using the streamlined classes.

You can watch Vickers demo his own sample app (https://github.com/ajcvickers/CompiledModelsDemo) in the Community Standup from the EF Core team (https://youtu.be/XdhX3iLXAPk). This is where I initiated my own education on compiled models. Additionally, you can hear team members Rojansky and Andriy Svyryd talk about additional benefits, starting at about 40 minutes into the video.

Update Databases with Stand-Alone Executable Migrations

Migrations has been a key feature for EF's “code first” support since the early days. You define the domain models for your software, and migrations then determine how to apply those models and their changes to the database schema. The API for migrations is dependent on EF and .NET APIs. Executing them in your CI/CD pipeline or just outside of development is tricky. You do have runtime methods to run migrations, such as the Database.Migrate() method. However, this comes with a critical caveat. If your app is a Web or serverless app (or API) where you may have multiple instances that point to a common database, there's a chance of hitting some damaging race conditions if multiple instances are concurrently attempting to migrate that database. The same problem exists if you're using Docker containers to manage application load.
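For reference, the runtime approach being cautioned against usually looks something like this minimal sketch. The Person and PeopleContext types and the connection string are hypothetical.

```csharp
using Microsoft.EntityFrameworkCore;

// Hypothetical entity and context, defined only so the sketch is self-contained.
public class Person
{
    public int Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlite("Data Source=MyDatabase.db");
}

public static class StartupMigration
{
    public static void Main()
    {
        using var context = new PeopleContext();

        // Applies any migrations that haven't been applied yet, creating the database if needed.
        // If several app instances reach this line at the same moment against one shared
        // database, they can collide, which is the race condition described above.
        context.Database.Migrate();
    }
}
```

This is convenient on a single development machine. The risk appears only once more than one instance can run it against the same database.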

A typical path for solving this problem is to let migrations generate SQL for you and use another mechanism for executing the SQL, for example, on production databases.

Migrations Bundles were introduced in EF Core 6 to provide another tool in the DevOps quiver to solve this problem. A Migration Bundle is a self-contained executable that can be run on a variety of CLIs: PowerShell, Docker, SSH, and more. It only requires that the .NET Runtime be available, but you don't have to install the SDK or any of the EF Core packages. Further, you have the option of creating a totally standalone version. Therefore, you can run it outside of your application and have it as an explicit step in your pipelines.

Creating the bundles is fairly simple, and, in fact, is merely another command in the Migrations API.

In the dotnet CLI, the command extends from migrations.

dotnet ef migrations bundle

In Visual Studio's Package Manager Console it's:

Bundle-Migration

The command has additional parameters to force the runtime to be included in the exe (making it truly self-contained) as well as familiar parameters to specify the DbContext, project, and startup project to use.

The resulting file is an executable named efbundle (with an extension driven by the operating system you're running on) and is dropped into the project's path. You can specify the name of the resulting file with the -o/--output parameter.

The bundle file is idempotent. It includes all of the migrations, but it checks the database and doesn't run any of the migrations that have already been applied.
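The bundle's internals aren't shown here, but the same applied versus pending distinction is visible through EF Core's runtime API. The following sketch, which again uses hypothetical Person and PeopleContext types, lists which migrations the database already has and which ones remain.

```csharp
using System;
using Microsoft.EntityFrameworkCore;

// Hypothetical entity and context, defined only so the sketch is self-contained.
public class Person
{
    public int Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlite("Data Source=MyDatabase.db");
}

public static class MigrationStatus
{
    public static void Print(PeopleContext context)
    {
        // Migrations already recorded in the database's migrations history table.
        foreach (var id in context.Database.GetAppliedMigrations())
        {
            Console.WriteLine($"applied: {id}");
        }

        // Migrations defined in the assembly that the database hasn't received yet.
        foreach (var id in context.Database.GetPendingMigrations())
        {
            Console.WriteLine($"pending: {id}");
        }
    }
}
```

Only the pending set needs to be run, which is why executing the same bundle twice against the same database is harmless.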

Your first test will most likely be directly on your development computer after creating the bundle. In my case, that was initially in the CLI on my MacBook where I had just called the migrations bundle CLI command - in my project's folder. On macOS, in the directory where the bundle and the project live, you'll run the bundle by typing ./efbundle. That tells the OS to run it from the current directory rather than searching the directories listed in the system's PATH variable. In Windows, you can just run efbundle.

Bundle, in this case, will be able to find the connection string for the database as if I were running dotnet ef database update whether it's defined in a DbContext file, a startup configuration, or appsettings file. In fact, efbundle is running the database update command on your behalf.

In your production or CI/CD pipeline you'll more likely want to pass in the --connection parameter.

./efbundle --connection "DataSource=xyz.db"

This example is still calling the bundle from the command line. But you can extrapolate to your DevOps tool of choice, like calling efbundle from, for example, a Dockerfile that's getting its connection string from a Docker env variable.

Support for Temporal Tables

Support for what? Yep, I'd literally never heard of (or perhaps remembered hearing of) temporal tables until this feature bubbled up in the EF Core 6 plans. But now that I'm aware of them, I can see why this was a highly requested feature for EF Core! Temporal tables are described in the SQL standards and have implementations in several databases, such as MariaDB, Oracle, PostgreSQL, and SQL Server. Interestingly, IBM DB2 implemented their own twist on temporal tables. SQL Server has supported them since SQL Server 2016 and refers to them as “system-versioned temporal tables.”

Temporal tables are an automated way for a database to provide audit trails. When you designate a table as a temporal table, every time any data is changed in that table, the data that it's replacing is automatically stored in something that's akin to a sub-table, which is the temporal table's history component. The temporal table must include two date columns (in SQL Server, these must be datetime2) that signify the start and end moments when the values in that row were true. They are demarcated as “System Time” columns. Not only are those always tracked in the main table, but they're transferred to the history table as well. You can learn more about temporal tables from the SQL Server perspective at docs.microsoft.com/en-us/sql/relational-databases/tables/temporal-tables. And thanks to EF Core, you don't have to worry about setting this up! Migrations will take care of it, as you'll read further on.

The main table always has the current state and is no different than any other table in that regard. But it's possible to query that table and include results from the historical data. This means you can find out what the state of data was at any given point in time. In SQL Server, there's a FOR SYSTEM_TIME clause with a number of sub-clauses that triggers SQL Server to involve the history table.

EF Core 6 supports temporal tables in two ways. The first is for configuration. If you flag an entity as mapping to a temporal table, this triggers migrations to create the extra table columns and history table.

The mapping is configured as a parameter of the ToTable mapping with an IsTemporal method:

    modelBuilder.Entity<Person>()
        .ToTable(tb => tb.IsTemporal());

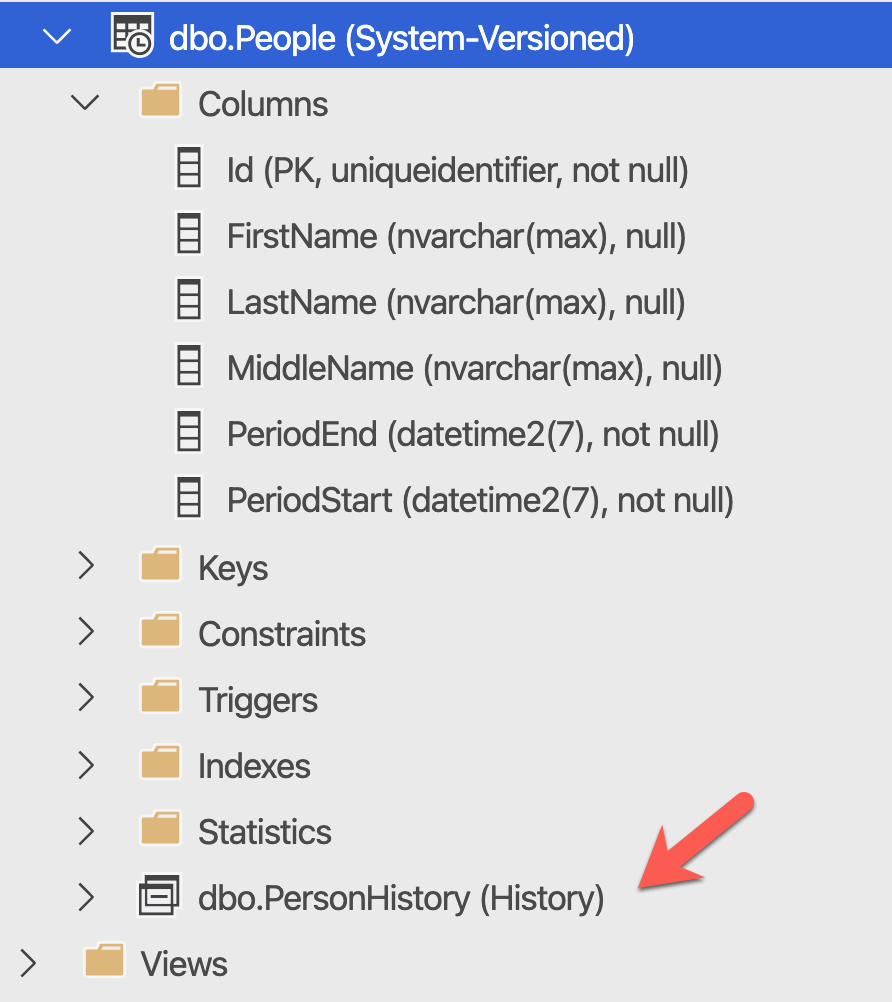

The migration created based on this automatically adds in the datetime2 properties (PeriodEnd and PeriodStart) as shadow properties, along with a number of relevant annotations. There's also a set of annotations on the table, including one for specifying a history table. You can see the migration file in this article's downloads.

Listing 1 shows the TSQL generated by the migration, which creates the People temporal table. Note that by the final release of EF Core 6, there may be a slight change to this, with the PeriodStart and PeriodEnd columns being flagged as HIDDEN so that a SELECT * query would not return those columns.

Listing 1: TSQL created by a migration defining a temporal table

    DECLARE @historyTableSchema sysname = SCHEMA_NAME()
    EXEC(N'CREATE TABLE [People] (
        [Id] uniqueidentifier NOT NULL,
        [FirstName] nvarchar(max) NULL,
        [LastName] nvarchar(max) NULL,
        [MiddleName] nvarchar(max) NULL,
        [PeriodEnd] datetime2 GENERATED ALWAYS AS ROW END NOT NULL,
        [PeriodStart] datetime2 GENERATED ALWAYS AS ROW START NOT NULL,
        CONSTRAINT [PK_People] PRIMARY KEY ([Id]),
        PERIOD FOR SYSTEM_TIME([PeriodStart], [PeriodEnd])
    ) WITH (SYSTEM_VERSIONING = ON (HISTORY_TABLE = [' +
        @historyTableSchema + N'].[PersonHistory]))');
    GO

With migrations creating the table, you can see the result in Figure 1. The People table is tagged as a “System-Versioned” table and it contains a sub-table called PersonHistory, which is tagged as a History table. The columns (not shown) in the history table are an exact match of the People table columns. Having migrations set this all up for you is truly a convenience.

Also note that the names for the tracking columns and history table are defaults. There are additional mappings that let you configure the column and table names as well.
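As a sketch of those additional mappings, the configuration below overrides the period column names and the history table name. The names ValidFrom, ValidTo, and PeopleAudit are invented for illustration, the Person class is a simplified stand-in, and the connection string is a placeholder for a local SQL Server instance.

```csharp
using System;
using Microsoft.EntityFrameworkCore;

// Simplified stand-in for the article's Person entity.
public class Person
{
    public Guid Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
    public string MiddleName { get; set; } = string.Empty;
    public string LastName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlServer(
            // Placeholder connection string; point this at your own server.
            @"Server=(localdb)\mssqllocaldb;Database=TemporalTest;Trusted_Connection=True");

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        modelBuilder.Entity<Person>()
            .ToTable("People", tb => tb.IsTemporal(ttb =>
            {
                ttb.HasPeriodStart("ValidFrom");    // hypothetical name replacing PeriodStart
                ttb.HasPeriodEnd("ValidTo");        // hypothetical name replacing PeriodEnd
                ttb.UseHistoryTable("PeopleAudit"); // hypothetical name replacing the default
            }));
    }
}
```

A migration generated from this model would use the custom names in the PERIOD FOR SYSTEM_TIME and HISTORY_TABLE parts of the CREATE TABLE statement.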

Now, onto the data. As you update or delete data from the People table, SQL Server sets the values of the PeriodStart and PeriodEnd columns accordingly and adds a new row to PersonHistory with the state of the data before those changes. It also sets the PeriodStart and PeriodEnd columns in the history table.

Queries that involve temporal data are referred to as “time travel” queries. Note that you don't explicitly query that History table. Instead, SQL Server includes it as needed.

Here's a simple TSQL query example for you, where I'm also explicitly pulling in the temporal data columns because I'm a curious cat:

    SELECT TOP (1000) [Id],[FirstName],[LastName],[MiddleName],[PeriodEnd],[PeriodStart]
    FROM [TemporalTest].[dbo].[People]
    FOR System_Time AS OF '2021-08-19 18:19:00'

When the main table data is more recent than the System_time I requested, SQL Server also looks in the History table. Fiddling with different System_Time predicate values, I can see different variations of the results data as I add and edit them along the way. I can even see data that was deleted after the AS OF date.

And now to EF Core's queries. There are new LINQ methods and expressions to support temporal tables.

- TemporalAsOf

- TemporalAll

- TemporalBetween

- TemporalFromTo

- TemporalContainedIn

The following query uses TemporalAsOf to return the People data as of the same time as I did using SQL, time traveling back to the state as of August 19, 2021 at 18:19:00 UTC.

    var date = DateTime.Parse("2021-08-19 18:19:00");
    var result = _context.People.TemporalAsOf(date).ToList();

The log shows that EF Core and the provider transformed this into the following TSQL, essentially the same that I had hand-coded.

    SELECT [p].[Id], [p].[FirstName], [p].[LastName], [p].[MiddleName],
        [p].[PeriodEnd], [p].[PeriodStart]
    FROM [People]
    FOR SYSTEM_TIME
    AS OF '2021-08-19T18:19:00.0000000'
    AS [p]

The result contained the same data I saw before, including the row that had subsequently been deleted and the original data prior to any edits.

This support makes it fairly easy to leverage temporal tables, thanks to migrations creating the proper schema and LINQ creating the relevant SQL. I can see why those who've been using temporal tables for years had surfaced this as one of the more highly requested features for EF Core.

There are a few important notes to keep in mind. First, to avoid side effects, some of EF Core's temporal LINQ methods inject AsNoTracking into the query because, as Rojansky explains, it's impossible to track multiple instances of the same data. However, the TemporalAsOf method is able to return tracked results. Second, these methods are only available on DbSet. Third, EF Core treats the PeriodEnd and PeriodStart details as shadow properties, so you can always force them to be returned by using EF.Property() in a query. And finally, the TemporalAsOf method will propagate to any related entities (mapped to temporal tables) in a query, for example, if they are included or projected.
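To illustrate the shadow property point, here is a minimal sketch that uses TemporalAll together with EF.Property to list every stored version of one person along with its period columns. The Person class is a simplified stand-in, the connection string is a placeholder, and the column names assume the defaults (PeriodStart and PeriodEnd).

```csharp
using System;
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Simplified stand-in for the article's Person entity.
public class Person
{
    public Guid Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
    public string LastName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlServer(
            // Placeholder connection string; point this at your own server.
            @"Server=(localdb)\mssqllocaldb;Database=TemporalTest;Trusted_Connection=True");

    protected override void OnModelCreating(ModelBuilder modelBuilder)
        => modelBuilder.Entity<Person>().ToTable(tb => tb.IsTemporal());
}

public static class TemporalQueries
{
    public static void PrintHistory(PeopleContext context, Guid personId)
    {
        var versions = context.People
            .TemporalAll() // current row plus every row in the history table
            .Where(p => p.Id == personId)
            .OrderBy(p => EF.Property<DateTime>(p, "PeriodStart"))
            .Select(p => new
            {
                p.FirstName,
                p.LastName,
                // The period columns are shadow properties, so they're read by name.
                PeriodStart = EF.Property<DateTime>(p, "PeriodStart"),
                PeriodEnd = EF.Property<DateTime>(p, "PeriodEnd")
            })
            .ToList();

        foreach (var v in versions)
        {
            Console.WriteLine($"{v.FirstName} {v.LastName}: {v.PeriodStart:o} to {v.PeriodEnd:o}");
        }
    }
}
```

Because the query projects into an anonymous type, nothing is tracked, which sidesteps the multiple-instances problem mentioned above.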

Bulk Conventions and Bulk Value Conversions

Specifying a convention for a particular type in your model has been possible but a little cumbersome and not at all discoverable. You had to drill into the model's metadata in the OnModelCreating method.

    foreach (var e in modelBuilder.Model.GetEntityTypes())
    {
        foreach (var prop in e.GetProperties()
            .Where(p => p.ClrType == typeof(string)))
        {
            prop.SetColumnType("nvarchar(100)");
        }
    }

The team revamped how models are configured under the covers, referred to as pre-convention model configuration (https://github.com/dotnet/efcore/issues/12229). In doing so, they were able to simplify your interaction with the APIs. With that change, you now have a way to define “bulk” conventions that's so much simpler that I literally found it heart-warming.

Note that custom conventions didn't make it into EF Core 6 but we're likely to get them in the next version. Read more in this GitHub issue: https://github.com/dotnet/efcore/issues/214.

The revamped model builder gives DbContext a new virtual method called ConfigureConventions along with a ModelConfigurationBuilder class. This new class has methods for bulk configurations for properties, as well as some other methods such as IgnoreAny. ModelConfigurationBuilder.Properties exposes a number of configurations similar to the ones you have via ModelBuilder.Entity().Property().

You need to override the ConfigureConventions method, as you do with OnModelCreating and OnConfiguring. In this case, I'll use Properties.HaveColumnType, which works like HasColumnType but more generically.

    protected override void ConfigureConventions(
        ModelConfigurationBuilder configurationBuilder)
    {
        configurationBuilder.Properties<string>()
            .HaveColumnType("nvarchar(100)");
    }

The other methods for configuring properties are AreFixedLength, AreUnicode, HaveAnnotation, HaveConversion, HaveMaxLength, HavePrecision and UseCollation.
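A few of those methods are sketched below alongside IgnoreAny. The Person and AuditStamp types, the chosen lengths and precision, and the SQLite connection string are all invented for illustration.

```csharp
using Microsoft.EntityFrameworkCore;

// Hypothetical type that should never be mapped, wherever it appears in the model.
public class AuditStamp
{
    public string MachineName { get; set; } = string.Empty;
}

// Hypothetical entity, defined only so the sketch is self-contained.
public class Person
{
    public int Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
    public decimal Balance { get; set; }
    public AuditStamp Stamp { get; set; } = new AuditStamp();
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlite("Data Source=MyDatabase.db");

    protected override void ConfigureConventions(ModelConfigurationBuilder configurationBuilder)
    {
        // Every string property: non-Unicode, capped at 100 characters.
        configurationBuilder.Properties<string>()
            .AreUnicode(false)
            .HaveMaxLength(100);

        // Every decimal property: precision 18, scale 2.
        configurationBuilder.Properties<decimal>()
            .HavePrecision(18, 2);

        // Leave AuditStamp out of the model wherever it's used as a property type.
        configurationBuilder.IgnoreAny<AuditStamp>();
    }
}
```

How much of this shows up in the schema depends on the provider; SQLite, for example, doesn't distinguish Unicode from non-Unicode text.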

From that list, I want to call out the HaveConversion method. This is related to value converters that were introduced in EF Core 2.1. In fact, I wrote about them in my CODE Focus article about EF Core 2.1 (https://www.codemag.com/article/1807071). Value converters allow you to configure mappings for CLR (or even your own) types that don't have direct translations to data types. There are a number of ways to express a conversion, but one frustrating drawback has been that you could only define a conversion for a single property. If you have many properties of the same type throughout your model, you have to write an explicit conversion for every single one. For comparison, here are two examples for converting individual properties.

    modelBuilder.Entity<Address>()
        .Property(a => a.AddressType)
        .HasConversion<string>();

    modelBuilder.Entity<Address>()
        .Property(ad => ad.StructureColor)
        // Color.Name round-trips through Color.FromName; ToString() would store "Color [Red]"
        .HasConversion(c => c.Name, s => Color.FromName(s));

The first persists an enum (AddressType) as a string rather than the conventional mapping of an integer, e.g., “Home” instead of 1. The second persists a property that's a System.Drawing.Color as the string name of the color and then transforms that string back to a Color type when it's read from the database. Having such an easy way to persist Color was a revelation when value converters were first introduced.

Now with configuration builders, you can be sure that any AddressType enum found throughout your model will get persisted as a string.

    configurationBuilder.Properties<AddressType>()
        .HaveConversion<string>();

The signature I used for transforming the color, where I pass in an expression describing how to save the data and another for how to materialize the data from the database, isn't valid with HaveConversion. And that's for an interesting reason, as explained to me by EF team member, Andriy Svyryd. Even if the API exposed it, the precompiled model feature won't be able to read it. Instead, you need to use a more explicit path, which means building a custom ValueConverter. I'll include the code for my custom ColorToStringConverter class in the article's download.
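The author's class ships with the download, so what follows is only a hypothetical sketch of what such a converter could look like, not the actual code. It assumes named colors only and stores Color.Name, because that value can be fed back into Color.FromName.

```csharp
using System.Drawing;
using Microsoft.EntityFrameworkCore.Storage.ValueConversion;

// Hypothetical implementation: persists a named Color as its name (for example, "Red")
// and rebuilds the Color from that name when the value is read back.
public class ColorToStringConverter : ValueConverter<Color, string>
{
    // HaveConversion<T>() needs a parameterless constructor on the converter.
    public ColorToStringConverter()
        : base(
            color => color.Name,          // to the database
            name => Color.FromName(name)) // from the database
    {
    }
}
```

Colors created from raw ARGB values don't have a name that Color.FromName understands, so a production converter would likely need a different string format for those.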

With that class in play, I can now add another configuration to ConfigureConventions to leverage the new ColorToStringConverter class, ensuring that all Color properties throughout the model will be persisted as strings.

    configurationBuilder.Properties<Color>()
        .HaveConversion<ColorToStringConverter>();

If you have a particular property that shouldn't follow that bulk conversion rule, you can specify it as a null conversion in this way:

    modelBuilder.Entity<Address>()
        .Property(ad => ad.SomeProperty)
        .HasConversion((ValueConverter?)null);

Be sure not to do that in the case of properties like Color that have no conventional way to map to data types.

I'll share one last note about type mapping for a less common use case. The case is when you're building a query using a custom type that EF Core can't map to a data type. The DefaultTypeMapping method helps solve this. For an example of how to use this, check out the test named “Can_use_custom_converters_without_prop” in this functional test class (https://bit.ly/TypeMapTest) in the EF Core GitHub repository.

More EF6 Parity for Fans of GroupBy in Queries

The team is working to narrow the gap between EF6 and EF Core to make it easier for developers to transition old applications if needed or apply their existing knowledge to new ones. In the category of querying, GroupBy has not been given quite as much love since the early days of EF Core. But there are now three additional query capabilities involving GroupBy and aggregates that are part of EF Core 6.

The first concerns navigations. If you have navigations defined on your entities and you wanted to drill into those navigation objects after grouping, EF Core was unable to translate the query. The workaround was to break your query up and then pull the results together after the fact. Now the query works as written. For example, imagine that you have a model with authors and books with a one-to-many relationship between an author and their multiple books. (There are no co-authors in this case.)

You might want to see if there's a pattern between author first names and their likelihood to write about .NET. Therefore, you want to write a query of authors, include books, and see how many of their books have the word “.NET” in the title.

The query:

    var groupedAuthors = _context.Authors.Include(a => a.Books)
        .GroupBy(a => a.FirstName)
        .Select(g => new
        {
            g.Key,
            AuthorCount = g.Count(),
            dotNetBooks = g.Sum(a => a.Books.Count(b => b.Title.Contains(".NET")))
        }).ToList();

This query fails in earlier versions of EF Core because the GroupBy would not have provided the properties of the related data (Books) to be used by the Select method. The exception suggests revising the query to perform some of the work in a client-side query. In EF Core 6, it will now succeed. And the results of this query may highlight a surprising number of authors named Scott who have quite a few books on .NET.

There are two other GroupBy features that were possible in EF6 but haven't been in EF Core until now.

- The ability to select Top N from a group.

- Using FirstOrDefault on groups.

You can read details of these in this GitHub pull request if you're interested (https://github.com/dotnet/efcore/pull/25495). Or just go forth and group!
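As a rough sketch of the FirstOrDefault case, the query below picks the most recently published book for each author. The Author, Book, and LibraryContext types are hypothetical, and whether the query translates can depend on your database provider, so treat it as something to try rather than a guarantee.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Hypothetical model: one author has many books.
public class Author
{
    public int Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
    public List<Book> Books { get; set; } = new();
}

public class Book
{
    public int Id { get; set; }
    public string Title { get; set; } = string.Empty;
    public DateTime PublishedOn { get; set; }
    public int AuthorId { get; set; }
}

public class LibraryContext : DbContext
{
    public DbSet<Author> Authors => Set<Author>();
    public DbSet<Book> Books => Set<Book>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlite("Data Source=Library.db");
}

public static class GroupByExamples
{
    public static void PrintNewestBookPerAuthor(LibraryContext context)
    {
        // Group the books by author, then take the newest book from each group.
        var newest = context.Books
            .GroupBy(b => b.AuthorId)
            .Select(g => g.OrderByDescending(b => b.PublishedOn).FirstOrDefault())
            .ToList();

        foreach (var book in newest)
        {
            Console.WriteLine($"{book?.AuthorId}: {book?.Title}");
        }
    }
}
```

Selecting the top N from a group presumably follows a similar shape with Take(n) in place of FirstOrDefault, but that's an assumption worth checking against the pull request.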

A More Intuitive CosmosDB Provider

I've saved the best for last because this was fun to explore. Improvements to the provider for accessing Cosmos DB were high on the wish list from the community. We've had the Cosmos provider since EF Core 3, so in case you're asking yourself “but why does an ORM need to work with a non-relational data store?” I answered that question in the EF Core 3 article referenced above. However, developers have asked why this non-relational provider got more love in this version than any of the relational providers. One reason, shared by Jeremy Likness, Sr. Program Manager for .NET Data at Microsoft, is that the Cosmos DB team gets “inundated with requests” for features in the Azure SDK from developers who prefer the EF Core APIs. In fact, if you look back at that EF Core 3 article, you'll see that my first reaction to the Cosmos provider even back then was "wow, it's so much easier to use than the SDK!". Another interesting data point from the survey was that MongoDB was the most requested provider. By investing in the Cosmos provider, the team is also paving the way for other non-relational providers. Yes, these are interesting points, but more interesting is that some of the new support for the provider in EF Core 6 better supports smart modeling for document database storage.

Richer Logging Details

First, I want to point out the new and improved support for logging events on the CosmosDB database. People requested details that would help them gain better insight into their resource use.

For example, Listing 2 shows logging output (filtered on LogLevel.Information) in EF Core 6 for a query executed with the CosmosDB provider.

Listing 2: Logging output from EF Core 6's Cosmos provider

    dbug: 08/25/2021 14:22:18.213 CoreEventId.QueryExecutionPlanned[10107]
          (Microsoft.EntityFrameworkCore.Query)
          Generated query execution expression:'queryContext =>
          new QueryingEnumerable<Person>(
              (CosmosQueryContext)queryContext,
              SqlExpressionFactory,
              QuerySqlGeneratorFactory,
              [Cosmos.Query.Internal.SelectExpression],
              Func<QueryContext, JObject, Person>,
              PeopleContext,
              null,
              False,
              True)'
    info: 08/25/2021 14:22:18.273 CosmosEventId.ExecutingSqlQuery[30100]
          (Microsoft.EntityFrameworkCore.Database.Command)
          Executing SQL query for container 'PeopleContext'
          in partition '?' [Parameters=[]]
          SELECT c
          FROM root c
          WHERE (c["Discriminator"] = "Person")
    info: 08/25/2021 14:22:19.292 CosmosEventId.ExecutedReadNext[30102]
          (Microsoft.EntityFrameworkCore.Database.Command)
          Executed ReadNext (983.9663 ms, 2.86 RU)
          ActivityId='5b500af5-77eb-4513-a14c-bd5a00d45c4c',
          Container='PeopleContext', Partition='?', Parameters=[]
          SELECT c
          FROM root c
          WHERE (c["Discriminator"] = "Person")
    dbug: 08/25/2021 14:22:22.535 CoreEventId.ContextDisposed[10407]
          (Microsoft.EntityFrameworkCore.Infrastructure)
          'PeopleContext' disposed.

The first section showing the QueryExecutionPlanned event is the same as in EF Core 5. However, the following two sections showing the database command are new. EF Core 6 is more closely aligned with the logging we've come to expect from the relational providers. But looking more closely, notice the ReadNext details. Those include not only the total round-trip time for your database call but also the RUs (request units) that are key to how your Azure bill is calculated.
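If you only care about those two command events, a sketch like this one narrows the log output to them using LogTo. The endpoint, key, and database name are placeholders, and the Person class is a simplified stand-in for the article's model.

```csharp
using System;
using Microsoft.EntityFrameworkCore;
using Microsoft.EntityFrameworkCore.Diagnostics;
using Microsoft.Extensions.Logging;

// Simplified stand-in for the article's Person entity.
public class Person
{
    public Guid Id { get; set; }
    public string FirstName { get; set; } = string.Empty;
}

public class PeopleContext : DbContext
{
    public DbSet<Person> People => Set<Person>();

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder
            .UseCosmos(
                "https://localhost:8081", // placeholder: local emulator endpoint
                "<your-account-key>",     // placeholder: supply your own key
                databaseName: "PeopleDb") // placeholder database name
            .LogTo(
                Console.WriteLine,
                // Only log the query being sent and the ReadNext result with its RU charge.
                new[] { CosmosEventId.ExecutingSqlQuery, CosmosEventId.ExecutedReadNext },
                LogLevel.Information);
}
```

Filtering by event keeps the request unit figures easy to spot when you're comparing the cost of different queries.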

The provider has additional new capabilities, such as raw queries with CosmosFromSQL, as well as some fixes to its behavior. And I happen to agree with Jeremy Likness about two other favorites: implicit ownership and support for primitive collections and dictionaries. So I'll show you these two features and you can read about other Cosmos enhancements with this filtered GitHub search: https://bit.ly/EFC6Github.

Conventionally Nested Documents aka Implicit Ownership

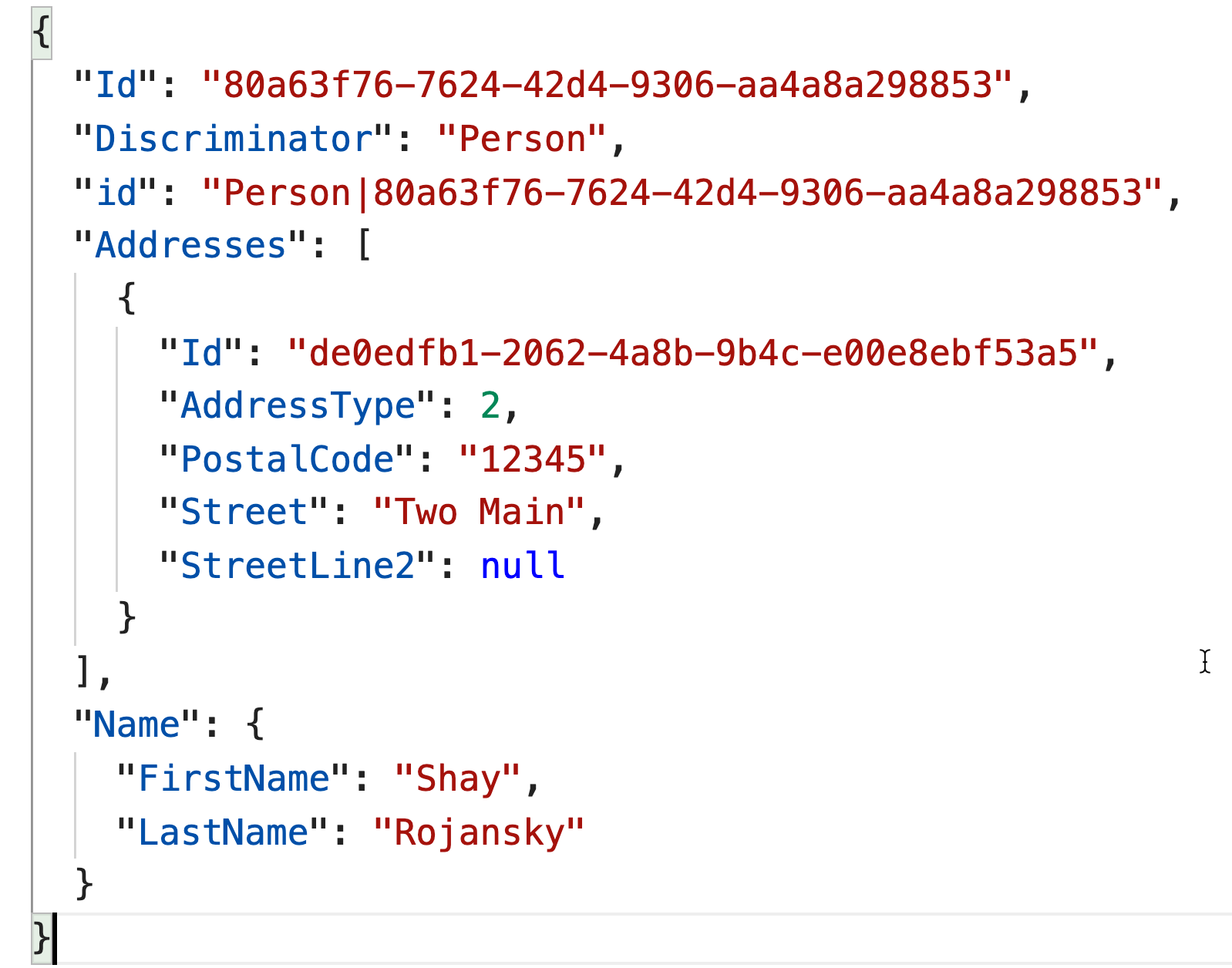

In document databases such as Cosmos DB, nested types are expressed naturally in the stored JSON structure. EF Core 6 recognizes this for what is otherwise known as owned entities, as well as certain relationships in your model.

Owned entities are EF Core's mechanism for identifying and persisting complex data types that are properties of entities. What differentiates these classes is that they don't have their own key property. With relational databases, it's tricky to store and retrieve data that's shaped this way and you're required to explicitly configure this relationship via the OwnsOne and OwnsMany mappings. In doing so, EF Core knows to infer key properties for the sake of persistence. Otherwise, EF Core assumes that the types are related but you forgot to specify a key property.

In the case of Cosmos DB, where it's easy to store nested objects, it feels redundant to have to configure this relationship when it's the obvious way to shape it. This is where the new “implicit ownership” feature of EF Core 6 comes in. However, it goes beyond the types that you currently define as owned types. In EF Core 6, related dependents will also be stored as subdocuments in Cosmos DB. Let's first look at the complex types, which may also be value objects in your system design.

There's not much to it. Let's say I have a type called PersonName where I have defined FirstName and LastName properties and a FullName property that concatenates them.

    public class PersonName
    {
        public string FirstName { get; private set; }
        public string LastName { get; private set; }

        public PersonName(string first, string last)
        {
            FirstName = first;
            LastName = last;
        }

        // Concatenates the trimmed first and last names.
        public string FullName => $"{FirstName.Trim()} {LastName.Trim()}";
    }

There's no identity key and I have no reason at all to create a relationship between PersonName and the types that use it. PersonName is a property of Person as well as some other classes. With a relational database provider, I'd have to configure PersonName as an owned entity of every type in which it's a property. When using the Cosmos provider (and let's just assume that this will carry over to future document database providers), I don't have to provide the configuration. PersonName will always be nested within a Person document.

    {
        "Id": "18369f48-c9c9-41e0-a6c1-427dcca4816b",
        "Discriminator": "Person",
        "id": "Person|18369f48-c9c9-41e0-a6c1-427dcca4816b",
        "Name": {
            "FirstName": "Andriy",
            "LastName": "Svyryd"
        }
    }
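For comparison, here's roughly what the explicit mapping would look like with a relational provider. Treat it as a sketch under assumptions: the classes are simplified stand-ins for my Person and PersonName types (public setters, no constructor), the context name is made up, and the provider configuration is left out.

```csharp
using System;
using Microsoft.EntityFrameworkCore;

// Simplified stand-ins for the article's types (assumptions for illustration).
public class PersonName
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
}

public class Person
{
    public Guid Id { get; set; }
    public PersonName Name { get; set; }
}

// Hypothetical context for a relational provider; provider setup omitted.
public class RelationalPeopleContext : DbContext
{
    public DbSet<Person> People { get; set; }

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        // A relational provider needs to be told that PersonName is owned by Person.
        // The Cosmos provider in EF Core 6 infers this by convention.
        modelBuilder.Entity<Person>().OwnsOne(p => p.Name);
    }
}
```

Every other entity that has a PersonName property would need its own OwnsOne call.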

You can also get nested documents with related entities - related types that do have identity keys - but only in a particular scenario.

In my little model of people and addresses (where only one person can live at a given address), the Person class has an Addresses property:

public List<Address> Addresses { get; set; }

The Address type is a true entity with a key property. Therefore, I have a one-to-many relationship between Person and Address.

In my first business rule scenario, I know that I'll never interact with addresses directly - only as part of a person object - and therefore I haven't defined a DbSet for Addresses in the DbContext.

Because of these two attributes of my model, I can only create or modify addresses in code as part of a Person object. And the Cosmos provider automatically stores all addresses as nested objects within person objects, as shown in Figure 2.

In my second business scenario, I want my app to be able to retrieve and persist addresses separately from their related Person documents. Therefore, I've defined a DbSet<Address> in the DbContext. In response to this, EF Core convention stores addresses as separate documents.

A third scenario is this: I don't want to have a DbSet<Address> defined in my DbContext, but I still prefer to have the Addresses persisted separately from the Person objects for other apps to access directly. In this case, you can explicitly configure the container for the Address type. But it doesn't have to get stored in a separate container. If you explicitly specify the same container, the Addresses get stored alongside the Person objects in the same database container.

Here is the configuration I've used for that specific use case.

modelBuilder.Entity<Address>().ToContainer(this.GetType().Name);

With the addresses stored separately, you can still interact with them through the Set<Address> method, if needed.
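Pulling the third scenario together, the context and a query through Set<Address> might look something like this sketch. The Street property and the AddressQueries helper are hypothetical names for illustration, and the connection setup is omitted.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Stand-in entities; the Street property is hypothetical.
public class Address
{
    public Guid Id { get; set; }
    public string Street { get; set; }
}

public class Person
{
    public Guid Id { get; set; }
    public List<Address> Addresses { get; set; }
}

public class PeopleContext : DbContext
{
    // Note: there is deliberately no DbSet<Address> here.
    public DbSet<Person> People { get; set; }

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        // Explicitly map Address to a container so it is stored as its own document.
        // Using the context's type name puts it in the same container as Person.
        modelBuilder.Entity<Address>().ToContainer(GetType().Name);
    }
}

// Hypothetical helper showing access to addresses without a DbSet property.
public static class AddressQueries
{
    public static List<Address> OnStreet(PeopleContext context, string street) =>
        context.Set<Address>()
            .Where(a => a.Street == street)
            .ToList();
}
```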

Intelligently Storing Collections and Dictionaries of Primitives

This is another feature that speaks to how differently document databases store their data from relational databases. And this capability also enhances how you model your entities when persisting them in a document database.

Here are two new properties in my Person class: a list of strings to store all of a person's nicknames and a dictionary where I can schedule what type of chocolate to eat on each weekday.

    public List<string> Nicknames { get; set; }
    public Dictionary<string, string> DailyChocolate { get; set; }

With a relational provider, EF Core has no way of conventionally persisting these properties. You'll be told that the type List<string> and the type Dictionary<string, string> need keys defined. Well, you can't really do that! They aren't entities! You can use a complicated value converter to do the trick. Check out how in this section of the EF Core docs (https://bit.ly/ConvertListString). Surely there's a way to create a value converter for a Dictionary as well.
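To give you a feel for what that workaround involves, here's a sketch of a JSON-based value converter for the list, in the style of the EF Core docs. The context name and the trimmed-down Person class are assumptions for illustration, and the provider setup is omitted.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;
using Microsoft.EntityFrameworkCore;
using Microsoft.EntityFrameworkCore.ChangeTracking;

// Trimmed-down stand-in for the article's Person class.
public class Person
{
    public Guid Id { get; set; }
    public List<string> Nicknames { get; set; } = new();
}

// Hypothetical context for a relational provider; provider setup omitted.
public class RelationalPeopleContext : DbContext
{
    public DbSet<Person> People { get; set; }

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        modelBuilder.Entity<Person>()
            .Property(p => p.Nicknames)
            .HasConversion(
                // Store the list as a JSON string in a single column.
                v => JsonSerializer.Serialize(v, (JsonSerializerOptions)null),
                // Read the JSON string back into a list.
                v => JsonSerializer.Deserialize<List<string>>(v, (JsonSerializerOptions)null),
                // Tell change tracking how to compare, hash, and snapshot the list.
                new ValueComparer<List<string>>(
                    (a, b) => a.SequenceEqual(b),
                    list => list.Aggregate(0, (hash, item) => HashCode.Combine(hash, item.GetHashCode())),
                    list => list.ToList()));
    }
}
```

That's a fair amount of ceremony for a list of strings, which is why the convention in the Cosmos provider is so welcome.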

Storing these in a document database is very natural, so the Cosmos provider in EF Core 6 handles it for you by convention, with no mappings required.

I've set some nicknames and the chocolate schedule in code:

    person.Nicknames = new List<string>
        { "Shay", "Roji", "Postgres Guy" };

    person.DailyChocolate = new Dictionary<string, string>
    {
        { "Monday", "Dark Chocolate" },
        { "Tuesday", "Salted Milk Chocolate" }
    };

And then I call SaveChanges. Here's how that resolves in the stored document:

    "Id": "429e66c1-f5e9-4750-98f9-c1de2b8a3758",
    "DailyChocolate": {
        "Monday": "Dark Chocolate",
        "Tuesday": "Salted Milk Chocolate"
    },
    "Discriminator": "Person",
    "Nicknames": [
        "Shay",
        "Roji",
        "Postgres Guy"
    ],

When querying for that data, it's resolved as the original List and Dictionary in the resulting objects.

There's So Much More to EF Core 6

It's never possible to share every new feature and improvement in an article like this. Therefore, I've stuck to some of the most impactful features of EF Core 6 that will be interesting to most users. But there are still so many interesting things brought to EF Core in this version. For example, in the Cosmos provider, some other improvements are raw SQL support and support for the Distinct operator (with limitations) in queries. Beyond Cosmos, EF Core can now scaffold many-to-many relationships from existing databases. Thanks to community member Willem Meints, there's now support for String.Concat in queries. Free text search in queries is now more flexible thanks to JSON value converters. The EF Core in-memory database now throws an exception if an attempt is made to save a null value for a property marked as required.

There are hundreds of improvements. Some you will never need, some are subtle and you may not notice, but each one will have a subset of developers who will experience a serious benefit from its existence.

The What's New document in the EF Core docs details more of these higher impact changes (https://docs.microsoft.com/en-us/ef/core/what-is-new/ef-core-6.0/whatsnew).

Although the collective bucket list of desires for EF Core, from both the EF Core team and the community, may be vast, EF Core 6 goes a long way toward checking off many of those items.